Manipulation Performance of an Assistive Robotic Arm Control Interface for Individuals with Cerebral Palsy - A Case Study

Cheng-Shiu Chung¹,², Kael Williams¹, Stacy M. Eckstein¹,², Ian Eckstein¹,², Rosemarie Cooper¹,², Rory A. Cooper¹,²

¹ Human Engineering Research Laboratories, Veterans Affairs Pittsburgh Healthcare System (Pittsburgh)

² Department of Rehabilitation Science and Technology, University of Pittsburgh (Pittsburgh)

INTRODUCTION

Cerebral palsy (CP) is a permanent neurodevelopmental disorder that arises in early childhood and results in serious physical disabilities from neuromuscular deficits. It is classified as spastic, dyskinetic, ataxic, or mixed [1]. Individuals with CP often experience reduced ability in object manipulation, in conjunction with disturbances of sensation, perception, cognition, communication, and behavior, which limits activities of daily living (ADL) and participation in the community [2]. Different assistive technologies (AT) have been suggested for people with CP to enhance communication, seating, mobility, and environmental control based on the level of gross motor function [3,4]. The assistive robotic manipulator (ARM) was developed to assist in object handling and manipulation for tasks such as dressing, food preparation, eating, and vocational/educational activities [5]. A recent long-term study revealed that using ARMs for personal tasks could have a large impact on ADL, participation, and quality of life and may reduce reliance on caregivers [6].

There are two commercially available ARMs on the market: the iARM (Exact Dynamics, the Netherlands) and the JACO arm (Kinova Robotics, Canada) [7]. The literature shows that ARMs improve user independence and self-esteem by enabling users to complete more everyday tasks on their own [6,8]. One study evaluated the performance of the original user interfaces of the two commercial ARMs using clinically validated outcome measurement tools and daily object manipulation tasks [9]. In addition, several control interfaces have been introduced to enhance the performance and user experience of the ARM, including single-switch scanning [10], head mouse [11], touchscreen, voice, vision, and brain-computer interface [7]. These alternative control interfaces were built on in-house developed software running on a computer or a microcontroller that controls the ARM, and they required a significant amount of modification to the ARM and wheelchair. For example, the voice with 3D vision interface [12] required an additional 3D camera mounted on the ARM, plus wheelchair modifications to mount the computer and supply it with dedicated power. Although researchers have started developing ARMs for people with CP [13], the results show that this work is still in the early stages of development, and no performance evaluation from ARM users with CP has been reported. Moreover, the abovementioned custom modifications to the ARM software, or the additional devices, may void the ARM and wheelchair warranties.

This study presents the design and development of an improved control interface that accommodates an individual with CP and enhances manipulation with the ARM. The control interface does not require ARM or wheelchair modification. After an iterative participatory design process to finalize the interface and its functions, the control interface was evaluated on ADL task performance, usability, satisfaction, and mental workload, and through interview questionnaires.

METHODS

This study was approved by the Institutional Review Board of the University of Pittsburgh (ID STUDY20050143). A single-case study was conducted with a 52-year-old male who has mixed CP with high and low muscle tone and involuntary movements. He is classified at Level IV on the Gross Motor Function Classification System Expanded and Revised (GMFCS-E&R), meaning that he can perform self-mobility when using a powered wheelchair, and at Level V on the Manual Ability Classification System (MACS), meaning that he requires assistance in all ADL and can only perform simple movements in special situations. His QuickDASH score was 63.6, which indicates significantly limited arm and shoulder function. The mounting location of the control interface was identified with an experienced clinician to maximize hand and finger dexterity and reduce involuntary movements so that the participant could rest his hand stably on the control interface. The location identified was next to the wheelchair controller, where the participant fully extended his right arm, and the reachable area was within a circle 50 mm in diameter around the right thumb.

Control Interface Development

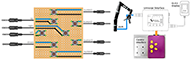

The participant identified the JACO ARM for the control interface development. After trying the wheelchair joystick interface to control the ARM through R-Net and failing to perform functional tasks, the participant indicated that the major barrier was that the mode switch buttons were located too far from the joystick, putting them out of reach when using the commercial wheelchair joystick controller. Through iterative participatory design, we made buttons, thumb joystick knobs, and shells in different sizes and dimensions from cardboard and plastic caps to identify the most comfortable options for the participant before creating 3D parts. The 3D mechanical parts were designed using SolidWorks 2017, a computer-aided design (CAD) software package. The control interface contains a thumb joystick knob and four buttons located about 25 mm around the knob. The knob can be exchanged for knobs of different diameters. The surrounding buttons have tactile patterns on the top face as physical feedback so that the user can recognize them by touch. The shell was designed to provide enough space for the participant to rest his palm without exceeding the width of the wheelchair. The mechanical parts were manufactured on a Connex 3 Objet500 3D printer (Stratasys, Eden Prairie, MN) using Vero photopolymers as the material.

Inside the shell is a circuit board (Figure 1) that contains eight buttons mapped to the button locations. Each button is connected to a tip-sleeve 3.5 mm plug that serves as a single-switch input on the Kinova universal interface device (Figure 1). The four buttons in the center were used for controlling the moving directions of the ARM. They could be replaced with a single navigation switch (JS5028 by E-Switch). The other four surrounding buttons were used for other functions such as opening/closing fingers, preset poses, and changing control modes. The control modes allow the user to control the 7 degrees of freedom ARM through the 2-dimensional joystick. Control modes include translation mode to move the ARM in the X, Y, and Z directions and wrist rotation mode to rotate the wrist. The functions of the buttons were programmed through the JacoSoft software developed by Kinova, which allows ARM actions to be set up based on button events. For example, when the up button is held down, the ARM moves upward under control mode B0. JacoSoft also allows multiple functions to be attached to one button in response to different events. For example, the ARM changes to the next mode when button 4 is clicked but moves to the ready or retract position when button 4 is held down. Table 1 shows the ARM actions set for the eight buttons with different events.

Control Interface Evaluation

After iterations of the participatory design process, the participant was satisfied with the mounting location, button and knob sizes, shell dimensions, and button functions of the control interface. The interface was evaluated on ADL task completion performance, usability, and mental workload. Performance was evaluated using the ARM evaluation tool [9,14], which consists of electronic components that measure task completion time and ISO 9241 throughput, a measure that is widely used to describe the performance of physical input devices based on Fitts' Law. The components simulate commonly performed ADL tasks, including pushing large and small circular buttons such as door openers and elevator buttons, flipping a toggle switch, pushing down a door handle, and turning a knob. The task completion time was computed from the release of the horizontal start pad to the completion of the task, i.e., pressing a button or rotating a knob or handle to the desired angle.
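As a rough illustration of the throughput measure mentioned above, the following Python sketch computes Fitts' index of difficulty in its Shannon formulation, ID = log2(D/W + 1), and divides it by the movement time. It is a simplified sketch and not the ARM evaluation tool's implementation: it uses the nominal target width rather than the effective width that the ISO procedure derives from the spread of selection endpoints, and the function names and the distance, width, and time values are hypothetical.

```python
import math


def index_of_difficulty(distance_mm: float, width_mm: float) -> float:
    """Fitts' index of difficulty (Shannon formulation), in bits."""
    if distance_mm < 0 or width_mm <= 0:
        raise ValueError("distance must be >= 0 and width must be > 0")
    return math.log2(distance_mm / width_mm + 1.0)


def throughput(distance_mm: float, width_mm: float, movement_time_s: float) -> float:
    """Throughput in bits per second for a single movement."""
    if movement_time_s <= 0:
        raise ValueError("movement time must be > 0")
    return index_of_difficulty(distance_mm, width_mm) / movement_time_s


# Hypothetical numbers for illustration only (not taken from the study):
# a 100 mm wide button placed 300 mm from the start pad, reached in 4 s.
example_id = index_of_difficulty(300.0, 100.0)
example_tp = throughput(300.0, 100.0, 4.0)
print(f"ID = {example_id:.2f} bits, throughput = {example_tp:.2f} bits/s")
```

Because throughput combines task difficulty and time, it allows tasks with different target sizes and distances to be compared on one scale, which completion time alone does not.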

| Button | Event | Mode B0 – Translation X/Y | Mode B1 – Translation Z/Wrist | Mode B2 – Wrist Rotation |
| --- | --- | --- | --- | --- |
| Up | Hold Down | Y Minus | Z Plus | X Theta Plus |
| Down | Hold Down | Y Plus | Z Minus | X Theta Minus |
| Left | Hold Down | X Plus | Z Theta Minus | Y Theta Minus |
| Right | Hold Down | X Minus | Z Theta Plus | Y Theta Plus |
| Button1 | Hold Down | Open Hand Three Fingers | Open Hand Three Fingers | Open Hand Three Fingers |
| Button2 | Hold Down | Close Hand Three Fingers | Close Hand Three Fingers | Close Hand Three Fingers |
| Button3 | Hold Down | Retract Ready to Use | Retract Ready to Use | Retract Ready to Use |
| Button3 | One-Click | Change Mode B | Change Mode B | Change Mode B |
| Button4 | Hold Down | Retract Ready to Use | Retract Ready to Use | Retract Ready to Use |
| Button4 | One-Click | Change Mode B | Change Mode B | Change Mode B |
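The button mapping in the table above can be pictured as a small lookup structure. The Python sketch below is only an illustration of that logic: the study configured these actions through JacoSoft, not through code like this, and the names `InterfaceState` and `handle_event`, the event labels `"hold"` and `"click"`, and the wrap-around from B2 back to B0 are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional

MODES = ("B0", "B1", "B2")  # translation X/Y, translation Z/wrist, wrist rotation

# Direction buttons act only while held down; the action depends on the mode.
DIRECTION_ACTIONS = {
    "Up":    {"B0": "Y Minus", "B1": "Z Plus",        "B2": "X Theta Plus"},
    "Down":  {"B0": "Y Plus",  "B1": "Z Minus",       "B2": "X Theta Minus"},
    "Left":  {"B0": "X Plus",  "B1": "Z Theta Minus", "B2": "Y Theta Minus"},
    "Right": {"B0": "X Minus", "B1": "Z Theta Plus",  "B2": "Y Theta Plus"},
}

# Function buttons behave the same in every mode; keys are (button, event).
FUNCTION_ACTIONS = {
    ("Button1", "hold"): "Open Hand Three Fingers",
    ("Button2", "hold"): "Close Hand Three Fingers",
    ("Button3", "hold"): "Retract Ready to Use",
    ("Button3", "click"): "Change Mode B",
    ("Button4", "hold"): "Retract Ready to Use",
    ("Button4", "click"): "Change Mode B",
}


@dataclass
class InterfaceState:
    """Hypothetical holder for the currently active control mode."""
    mode: str = "B0"


def handle_event(state: InterfaceState, button: str, event: str) -> Optional[str]:
    """Return the ARM action for a button event, updating the mode if needed."""
    if button in DIRECTION_ACTIONS:
        return DIRECTION_ACTIONS[button][state.mode] if event == "hold" else None
    action = FUNCTION_ACTIONS.get((button, event))
    if action == "Change Mode B":
        # Assumption: modes cycle B0 -> B1 -> B2 -> B0.
        state.mode = MODES[(MODES.index(state.mode) + 1) % len(MODES)]
    return action


state = InterfaceState()
print(handle_event(state, "Up", "hold"))        # Y Minus
print(handle_event(state, "Button4", "click"))  # Change Mode B
print(handle_event(state, "Up", "hold"))        # Z Plus
```

Keeping the function buttons independent of the mode means the hand and retract actions are always available, while the four direction buttons are reused across modes to cover all axes.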

After obtaining consent from the participant, the JACO ARM and the control interface were installed on the participant's wheelchair. The control interface was installed next to the wheelchair controller. The left-handed JACO ARM was mounted on the left side of the wheelchair next to the participant's knee (Figure 2). Before using the control interface, the participant was interviewed about aspects of the ARM interface. The participant was then trained to use the control interface to move the ARM. Once the participant was comfortable with the interface and familiar with the button functions, he was asked to complete each task five times with the control interface. After completing all tasks, the participant completed the outcome measures and answered the interview questionnaire.

Outcome Measurements

The ARM evaluation tool provides the task completion time. Usability was evaluated using a clinically validated scale, the System Usability Scale (SUS). Mental workload was evaluated using the NASA Task Load Index (NASA-TLX). Satisfaction was evaluated using the Quebec User Evaluation of Satisfaction with Assistive Technology (QUEST) version 2.0. In addition, a questionnaire was administered to capture the participant's experience before and after using the control interface.
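For readers unfamiliar with how two of these instruments produce a single number, the sketch below shows the standard SUS scoring rule and an unweighted (raw) NASA-TLX average. It is offered as a minimal illustration: the study does not state whether the raw or the weighted NASA-TLX variant was used, so the raw variant here is an assumption, and all response values in the example are hypothetical rather than the participant's answers.

```python
from statistics import mean

TLX_SUBSCALES = {"mental", "physical", "temporal", "performance", "effort", "frustration"}


def sus_score(responses: list[int]) -> float:
    """Standard SUS scoring for ten items rated 1 (strongly disagree) to 5."""
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("SUS needs ten responses, each from 1 to 5")
    # Odd-numbered items are positively worded, even-numbered ones negatively.
    odd_items = sum(r - 1 for r in responses[0::2])
    even_items = sum(5 - r for r in responses[1::2])
    return (odd_items + even_items) * 2.5  # scales the 0-40 sum to 0-100


def raw_tlx(ratings: dict[str, float]) -> float:
    """Unweighted NASA-TLX: mean of the six subscale ratings (0-100 each)."""
    if set(ratings) != TLX_SUBSCALES:
        raise ValueError("expected exactly the six NASA-TLX subscales")
    return mean(ratings.values())


# Hypothetical responses for illustration only.
print(sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2]))
print(raw_tlx({"mental": 10.0, "physical": 20.0, "temporal": 30.0,
               "performance": 40.0, "effort": 50.0, "frustration": 60.0}))
```

Higher SUS values indicate better usability, whereas higher NASA-TLX values indicate greater workload, so the two scores read in opposite directions.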

RESULTS

Task completion time (seconds) for each trial:

| Trial | Big Button | Elevator Button | Toggle Switch | Door Handle | Turning Knob |
| --- | --- | --- | --- | --- | --- |
| 1 | 41.07 | 61.18 | 91.30 | 134.80 | 100.89 |
| 2 | 42.51 | 45.90 | 22.09 | 31.32 | 127.13 |
| 3 | 2.98 | 50.95 | 37.38 | 25.23 | 84.01 |
| 4 | 2.14 | 63.70 | 54.86 | 35.21 | 79.57 |
| 5 | 2.56 | 45.45 | 87.09 | 27.02 | 84.43 |

The participant was able to use the control interface after installation without any assistance and completed all tasks independently. Table 2 shows the completion time of each trial for the ADL tasks. In most tasks, the participant took longer in the first and second trials and completed the task faster in the following trials. The participant reported a satisfaction score of 4.14/5 on the QUEST, a usability score of 95/100 on the SUS, and a mental workload score of 24.167 on the NASA-TLX. After using the control interface, the participant rated his anxiety lower, from 3 to 1 on a 10-point Likert scale, and felt more confident of achieving goals using the ARM, from 8 to 10. The participant was satisfied with the safety, ease of use, durability, and effectiveness of the interface. On the NASA-TLX subscales, the participant reported medium workload for mental demand and effort, very low for frustration and temporal demand, low for physical demand, and perfect for performance.
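The trial-by-trial trend described above can be checked directly from the reported completion times. The following Python sketch compares the mean of the first two trials with the mean of the last three for each task. The split after trial 2 follows the wording of the text, but treating it as a formal analysis boundary is an assumption of this example, as is the helper name `early_late_means`.

```python
from statistics import mean

# Completion times in seconds, trials 1 to 5, copied from the results table.
completion_times_s = {
    "Big Button":      [41.07, 42.51, 2.98, 2.14, 2.56],
    "Elevator Button": [61.18, 45.90, 50.95, 63.70, 45.45],
    "Toggle Switch":   [91.30, 22.09, 37.38, 54.86, 87.09],
    "Door Handle":     [134.80, 31.32, 25.23, 35.21, 27.02],
    "Turning Knob":    [100.89, 127.13, 84.01, 79.57, 84.43],
}


def early_late_means(times: list[float], split: int = 2) -> tuple[float, float]:
    """Mean of the first `split` trials and mean of the remaining trials."""
    return mean(times[:split]), mean(times[split:])


summary = {task: early_late_means(t) for task, t in completion_times_s.items()}
for task, (early, late) in summary.items():
    trend = "faster" if late < early else "not faster"
    print(f"{task}: trials 1-2 mean {early:.2f} s, "
          f"trials 3-5 mean {late:.2f} s ({trend} later)")
```

With only five trials per task and a single participant, such averages are descriptive only and should not be read as a statistical test.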

DISCUSSION AND CONCLUSIONS

The participant with CP requires caregiving support in all ADLs. However, the study revealed that the participant was able to independently perform manipulation tasks with the ARM using the improved control interface. This would significantly improve the participant's independence. This study showed that the participatory design process with rapid prototyping using cardboard and a 3D printer helped to accurately identify the end-user's needs and to communicate effectively with the individual with CP, who is non-verbal. The high satisfaction and excellent usability scores showed that the participant had a good user experience with the control interface. The participant liked the ease of use of the control interface, reporting minimal frustration and high perceived performance on the workload scale, together with its durability and effectiveness in completing ADLs. The reduction in task completion time demonstrated the learning curve of adapting to the control interface over the first few trials. Compared with previous studies [9], the results also revealed that using this control interface was faster than the keypad but slightly slower than the 3D joystick.

Although the participant had already tried the control interface several times during the iterative participatory design process, he did not perform any tasks on the ARM evaluation tool during that process. He only went through basic ARM control motions, such as moving up or down, to test the settings of the control interface. Therefore, when performing on the ARM evaluation tool, the participant did not follow the smoothest trajectories in the initial trials, and the performance of some tasks was still not entirely optimized within the five trials. This learning experience helped to identify the initial barriers in the control interface and how easily and quickly the participant could correct errors or change manipulation strategies. As a suggestion for future designs, the participant proposed mounting the OLED display in a cradle attached to the front of the control interface.

To conclude, this study introduced the design and development process of an improved and effective control interface adapted to an individual with CP to provide independent manipulation with the ARM. The finalized control interface was evaluated on ADL task performance, usability, satisfaction, and mental workload, and through interview questionnaires. High satisfaction and excellent usability show that the control interface was able to meet the needs of the individual with CP.

REFERENCES

1. Rosenbaum P. The Definition and Classification of Cerebral Palsy. Neoreviews [Internet]. 2006 Nov 1;7(11):e569-74. Available from: https://publications.aap.org/neoreviews/article/7/11/e569/87112/TheDefinition-and-Classification-of-Cerebral

2. Braendvik SM, Elvrum A-KG, Vereijken B, Roeleveld K. Relationship between neuromuscular body functions and upper extremity activity in children with cerebral palsy. Dev Med Child Neurol [Internet]. 2010 Feb;52(2):e29-34. Available from: https://onlinelibrary.wiley.com/doi/10.1111/j.1469-8749.2009.03490.x

3. Vitrikas K, Dalton H, Breish D. Cerebral palsy: An overview. Am Fam Physician. 2020;101(4):213-20.

4. Zupan A, Jenko M. Assistive technology for people with cerebral palsy. East J Med. 2012;17(4):194-7.

5. Laffont I, Biard N, Chalubert G, Delahoche L, Marhic B, Boyer FC, et al. Evaluation of a graphic interface to control a robotic grasping arm: a multicenter study. Arch Phys Med Rehabil [Internet]. 2009 Oct [cited 2012 Oct 16];90(10):1740-8. Available from: http://www.ncbi.nlm.nih.gov/pubmed/19801065

6. Beaudoin M, Lettre J, Routhier F, Archambault PS, Lemay M, Gelinas I. Long-term use of the JACO robotic arm: a case series. Disabil Rehabil Assist Technol [Internet]. 2019 Apr 3;14(3):267-75. Available from: https://www.tandfonline.com/doi/full/10.1080/17483107.2018.1428692

7. Chung C-S, Wang H, Cooper RA. Functional assessment and performance evaluation for assistive robotic manipulators: Literature review. J Spinal Cord Med. 2013;36(4):273-89.

8. Campeau-Lecours A, Maheu V, Lepage S, Lamontagne H, Latour S, Paquet L, et al. JACO Assistive Robotic Device: Empowering People With Disabilities Through Innovative Algorithms. In: RESNA Annual Conference. Arlington, VA; 2016.

9. Chung C-SS, Wang H, Hannan MJ, Ding D, Kelleher AR, Cooper RA. Task-oriented performance evaluation for assistive robotic manipulators: a pilot study. Am J Phys Med Rehabil. 2017;96(6):395-407.

10. Wakita Y, Yamanobe N, Nagata K, Ono E. Single-Switch User Interface for Robot Arm to Help Disabled People Using RT-Middleware. J Robot. 2011;2011:1-13.

11. Quintero CP, Ramirez O, Jagersand M. VIBI: Assistive vision-based interface for robot manipulation. In: 2015 IEEE International Conference on Robotics and Automation (ICRA). IEEE; 2015. p. 4458-63.

12. Ka HW, Chung CS, Ding D, James K, Cooper R. Performance evaluation of 3D vision-based semiautonomous control method for assistive robotic manipulator. Disabil Rehabil Assist Technol [Internet]. 2018;13(2):140-5. Available from: https://doi.org/10.1080/17483107.2017.1299804

13. Rozas Llontop DA, Cornejo J, Palomares R, Cornejo-Aguilar JA. Mechatronics Design and Simulation of Anthropomorphic Robotic Arm mounted on Wheelchair for Supporting Patients with Spastic Cerebral Palsy. 2020 IEEE Int Conf Eng Veracruz, ICEV 2020. 2020;7-11.

14. Chung C, Wang H, Kelleher AR, Cooper RA. Development of a Standardized Performance Evaluation ADL Task Board for Assistive Robotic Manipulators. In: RESNA Annual Conference. Seattle, WA; 2013.

ACKNOWLEDGEMENTS

Funding was provided by VA Center (Grant #B9250-C), the Veterans' Trust Fund, Paralyzed Veterans of America (Grant #3157), and the generous supporters of HERL's Engage Pitt ELeVATE campaign.